Hi everyone 👋 - We just shipped some powerful features to Kiln as part of our v0.23.0 app release!

RAG Evals with Synthetic Q&A Data From Your Docs

Evaluating RAG, or agents that use RAG, is tricky. An LLM-as-judge doesn't have the knowledge in your documents, so it can't tell whether a response is correct. But giving the judge access to RAG biases the evaluation.

The solution: reference-answer evals. The judge compares results to a known correct answer. Building these datasets used to be a long manual process… until now.

Kiln now builds Q&A datasets for evals by iterating over your document store. The process is fully interactive and takes just a few minutes to generate hundreds of reference answers from your documents. Use it to evaluate RAG accuracy end-to-end, including whether your agent calls RAG at the right times with high-quality queries. Learn more in our docs.
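To make the reference-answer idea concrete, here is a minimal Python sketch of the eval loop. It is not Kiln's implementation: `QAPair`, `build_judge_prompt`, `run_reference_eval`, and the `generate`/`judge` callables are hypothetical names, and the PASS/FAIL verdict format is an assumption. The key point is that the judge receives the reference answer in its prompt, so it needs no retrieval access of its own.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class QAPair:
    question: str
    reference_answer: str  # known-correct answer, e.g. generated from your documents


def build_judge_prompt(question: str, reference: str, response: str) -> str:
    # The judge sees the reference answer directly, so it never needs RAG itself.
    return (
        "Compare the RESPONSE to the REFERENCE ANSWER for the QUESTION.\n"
        "Reply PASS if the response is consistent with the reference, otherwise FAIL.\n\n"
        f"QUESTION: {question}\n"
        f"REFERENCE ANSWER: {reference}\n"
        f"RESPONSE: {response}\n"
    )


def run_reference_eval(
    dataset: list[QAPair],
    generate: Callable[[str], str],  # hypothetical: your RAG system or agent
    judge: Callable[[str], str],     # hypothetical: an LLM-as-judge call
) -> float:
    """Return the fraction of questions the judge marks PASS."""
    if not dataset:
        return 0.0
    passed = 0
    for pair in dataset:
        response = generate(pair.question)
        verdict = judge(build_judge_prompt(pair.question, pair.reference_answer, response))
        if verdict.strip().upper().startswith("PASS"):
            passed += 1
    return passed / len(dataset)
```

Plugging your RAG system or agent in as `generate` and an LLM call in as `judge` yields a pass rate over the generated Q&A set.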

Tool Use Evals: The Right Tool at the Right Time

It doesn't matter how well a tool works if it isn't invoked when needed. Kiln now includes a new eval for tool use that checks:

- A tool is called when needed

- A tool isn't called when it shouldn't be

- The parameters passed to the tool are correct

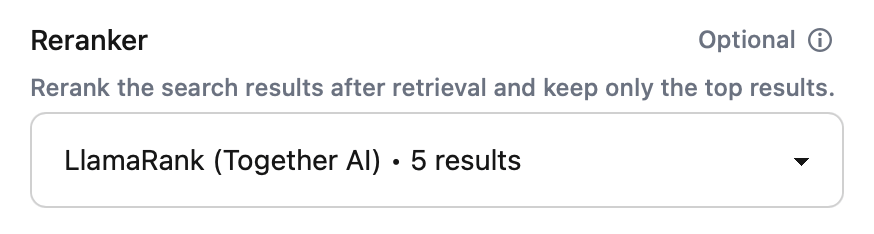
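As a rough illustration of how the three checks above could be scored for a single test case, here is a hypothetical Python sketch (not Kiln's API). It assumes each case records the expected tool call, or `None` when no tool should be called. It compares parameters by exact match, which is a simplifying assumption; a real eval may allow looser matching.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class ToolCall:
    name: str
    arguments: dict[str, Any] = field(default_factory=dict)


def check_tool_use(expected: Optional[ToolCall], actual: list[ToolCall]) -> str:
    """Score one test case; `expected` is None when no tool should be called."""
    if expected is None:
        # Check 2: a tool isn't called when it shouldn't be.
        return "pass" if not actual else "fail: tool called when not needed"
    matching = [call for call in actual if call.name == expected.name]
    if not matching:
        # Check 1: the tool is called when needed.
        return "fail: tool not called when needed"
    if not any(call.arguments == expected.arguments for call in matching):
        # Check 3: the parameters passed to the tool are correct (exact match here).
        return "fail: wrong parameters"
    return "pass"
```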

RAG Reranking

RAG reranking refines your search output by sorting and trimming it, so the LLM sees only the most relevant information.
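A minimal Python sketch of the sort-and-trim step. Production rerankers usually score each (query, chunk) pair with a dedicated reranking model; here a simple word-overlap score stands in for that model, and `rerank`, `overlap_score`, and `top_k` are hypothetical names rather than Kiln settings.

```python
import re


def _tokens(text: str) -> set[str]:
    # Lowercased word set; a crude stand-in for a real relevance model.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_score(query: str, chunk: str) -> float:
    # Fraction of query words that also appear in the chunk.
    q = _tokens(query)
    if not q:
        return 0.0
    return len(q & _tokens(chunk)) / len(q)


def rerank(query: str, chunks: list[str], top_k: int, score=overlap_score) -> list[str]:
    """Sort retrieved chunks by relevance to the query, then keep only the top_k."""
    ranked = sorted(chunks, key=lambda c: score(query, c), reverse=True)
    return ranked[:top_k]
```

Swapping `score` for a real reranking model changes the quality of the ranking but not the shape of the step: score, sort, keep the top results.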

To add reranking in Kiln, select a reranking option when creating a new search tool.

RAG Semantic Chunking

Semantic chunking splits your documents into chunks by meaning rather than length. This improves retrieval accuracy and helps ensure the content returned to LLMs is relevant.
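One common way to chunk by meaning is to embed consecutive sentences and start a new chunk wherever their similarity drops below a threshold. The Python sketch below shows that idea; it is not necessarily the method Kiln uses. The bag-of-words `embed` function is a toy stand-in for a real embedding model, and the function names and `threshold` value are illustrative assumptions.

```python
import math
import re
from collections import Counter


def embed(sentence: str) -> Counter:
    # Toy bag-of-words "embedding"; a real pipeline would call an embedding model here.
    return Counter(re.findall(r"[a-z0-9]+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunks(text: str, threshold: float = 0.1) -> list[str]:
    """Split text into sentences, starting a new chunk where adjacent sentences drift apart in meaning."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    for prev, cur in zip(sentences, sentences[1:]):
        if cosine(embed(prev), embed(cur)) < threshold:
            chunks.append([cur])    # topic shift: start a new chunk
        else:
            chunks[-1].append(cur)  # same topic: extend the current chunk
    return [" ".join(c) for c in chunks]
```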

To use semantic chunking in Kiln, select a semantic chunking method when creating a search tool.

Kiln MCP Server: Agents and RAG

You can now deploy Kiln agents and RAG search tools as an MCP server, letting you integrate Kiln projects into other AI tools.

Kiln v0.23.0 App Release

Our new v0.23.0 app release includes all the features above, plus:

- Visual Schema Builder: define complex tool schemas in an interactive UI

- New Models: MiniMax M2, Kimi K2 Thinking, Qwen3 VL

- And more: embedding models on OpenRouter, a fix for a Vertex fine-tuning bug, more deterministic OpenRouter routing, and dozens of other improvements